How OnlineOrNot more than halved its AWS bill

Max Rozen / Published: October 28, 2024

Chances are, you've heard one of the main promises of serverless compute: "you only pay for what you use". While convenient when starting a new project, once you start to get continuous usage on that compute, you start to realize you're paying a hefty premium for that convenience.

If you're using AWS Lambda like I am, chances are that "for what you use" part isn't completely true either. If your function spends the majority of its time waiting for I/O to complete (fetching external URLs, querying DBs), you're paying a serverless premium mostly for your compute to sit around, waiting for something to do.

My solution to this was to migrate to Cloudflare Workers. With Workers, I went from paying for up to 30,000 ms of a function waiting around while fetching a URL, to paying for the 1.4 ms (p99) of CPU time it takes to process the data before and after fetching.

Table of contents:

- The project

- "But why don't you just use VMs?"

- Why Workers worked out to be significantly cheaper

- The approach to moving off AWS Lambda

- Rolling out the change

- It's faster, too?

- Summary

The project

OnlineOrNot is a bootstrapped business that provides status pages with uptime monitoring attached, for software teams. When I started out, it was the 200th alternative uptime monitor for the Internet. To save money on the off-chance no one other than me used it, I built it on AWS Lambda. It did indeed cost nothing while no one was using it.

Building an uptime checker is a simple matter of code. You need:

- a process to query a database, find checks that are ready to be run, and queue them up in the right location

- a process that picks up checks, runs them, and sends the results somewhere
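As a rough illustration of those two processes, here is a minimal TypeScript sketch. The `Check` and `CheckResult` shapes, the field names, and the in-memory per-region queues are assumptions made for the example, not OnlineOrNot's actual schema or code.

```typescript
// Hypothetical shape of a check row; field names are illustrative only.
interface Check {
  id: string;
  url: string;
  region: string;
  intervalSeconds: number;
  lastRunAt: number; // epoch milliseconds of the previous run
}

interface CheckResult {
  checkId: string;
  ok: boolean;
  status: number; // 0 when no HTTP response was received
  durationMs: number;
}

// Process 1: find checks that are due and group them by the region that should run them.
function queueDueChecks(checks: Check[], now: number): Map<string, Check[]> {
  const queues = new Map<string, Check[]>();
  for (const check of checks) {
    const isDue = now - check.lastRunAt >= check.intervalSeconds * 1000;
    if (!isDue) continue;
    const queue = queues.get(check.region) ?? [];
    queue.push(check);
    queues.set(check.region, queue);
  }
  return queues;
}

// Minimal stand-in for fetch so the sketch does not depend on any runtime's types.
type Fetcher = (url: string) => Promise<{ ok: boolean; status: number }>;

// Process 2: run one check and produce a result to send somewhere.
async function runCheck(check: Check, fetcher: Fetcher): Promise<CheckResult> {
  const startedAt = Date.now();
  try {
    const response = await fetcher(check.url);
    return { checkId: check.id, ok: response.ok, status: response.status, durationMs: Date.now() - startedAt };
  } catch {
    // Network-level failure (DNS, timeout, refused connection): no HTTP status to report.
    return { checkId: check.id, ok: false, status: 0, durationMs: Date.now() - startedAt };
  }
}
```

In a runtime with a global `fetch`, the fetcher could be passed as `(url) => fetch(url)`.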

On AWS Lambda, this worked out to be a single function for picking up checks, an SNS topic, another function for running checks, a queue for batching writes to the DB, and a final function for writing results to the DB.

Fast-forward to a few weeks ago, and the vast majority of OnlineOrNot's cloud spend came from paying for AWS Lambda, for functions that spent most of their time waiting for things to do.

"But why don't you just use VMs?"

I've tried moving off of AWS Lambda before, too.

More than two years ago, after running OnlineOrNot for a bit and seeing costs steadily grow, I tried moving the service to continuously running VMs. The particular vendors I picked at the time struggled to maintain more than a few nines of reliability, which is awkward when you're trying to be accurate at measuring the reliability of others.

Long story short, I moved back to AWS, happy to continue paying the premium, knowing it would at least be reliable.

Why Workers worked out to be significantly cheaper

If you're curious, there's a good explanation of how Workers works, but the gist is this: classic serverless platforms run a chunky VM under the hood, which can't easily be paused and restarted while waiting for I/O to complete. Workers is built on the V8 runtime, where the isolates that run your code are so lightweight that they can easily be restarted.

The most expensive part of my AWS Lambda functions was waiting for DB queries to finish and fetching external URLs - Cloudflare doesn't bill for that.
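To make the difference concrete, here is a small sketch comparing the two metering models. The `Invocation` shape and the sample numbers are made up for illustration, and the sketch deliberately ignores per-request fees, memory size, and actual prices: it only compares how many milliseconds end up on the meter.

```typescript
// One invocation, split into time spent computing and time spent waiting on I/O.
interface Invocation {
  cpuMs: number;
  ioWaitMs: number;
}

// Duration-based billing: the meter runs for the whole invocation, waiting included.
function billedDurationMs(inv: Invocation): number {
  return inv.cpuMs + inv.ioWaitMs;
}

// CPU-time billing: only the time spent executing code is metered.
function billedCpuMs(inv: Invocation): number {
  return inv.cpuMs;
}

// Hypothetical check: 2 ms of CPU around a 998 ms wait for a slow URL.
const slowCheck: Invocation = { cpuMs: 2, ioWaitMs: 998 };
console.log(billedDurationMs(slowCheck)); // 1000
console.log(billedCpuMs(slowCheck)); // 2
```

The slower the URL being checked, the wider the gap between the two numbers, which is why an I/O-heavy workload like uptime checking benefits so much.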

So while Workers does provide significantly cheaper compute, what stopped me (until recently) from using them as the main compute for my own applications was the inability to talk directly to my existing database.

And then Workers started talking to Postgres

Cloudflare announced Hyperdrive back in late 2023, and it became generally available in April 2024. It was exactly what I was looking for to unlock building entire applications on Workers.

Hyperdrive lets you query Postgres/MySQL databases from Cloudflare Workers: it's a connection pooler with an optional query cache built in.

The approach to moving off AWS Lambda

I was initially worried about how much time I'd spend rewriting, but it turned out that all of my AWS Lambda code was compatible with Cloudflare Workers. In the move I decided to change my Postgres client library from pg-promise to Postgres.js (Hyperdrive's recommended library), and remove the queue for batching writes, since I now had a connection pooler.

I started by creating a new Durable Object with a single purpose: run an alarm every 15 seconds, and start the checker.
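A minimal sketch of that alarm loop might look like the following. To keep it self-contained, it uses a small `AlarmStorage` interface that mirrors the `getAlarm`/`setAlarm` methods of Durable Object storage; the `CheckerClock` class and the `startChecker` callback are hypothetical names. In a real Durable Object the runtime calls `alarm()` for you, and the storage would come from the object's state rather than being passed in by hand.

```typescript
// Subset of the Durable Object storage API that this sketch relies on.
interface AlarmStorage {
  getAlarm(): Promise<number | null>;
  setAlarm(scheduledTime: number): Promise<void>;
}

const INTERVAL_MS = 15 * 1000;

class CheckerClock {
  private readonly storage: AlarmStorage;
  private readonly startChecker: () => Promise<void>;

  constructor(storage: AlarmStorage, startChecker: () => Promise<void>) {
    this.storage = storage;
    this.startChecker = startChecker;
  }

  // Arm the first alarm, unless one is already pending.
  async start(now: number = Date.now()): Promise<void> {
    if ((await this.storage.getAlarm()) === null) {
      await this.storage.setAlarm(now + INTERVAL_MS);
    }
  }

  // Alarm handler: schedule the next tick first, then kick off the checker.
  async alarm(now: number = Date.now()): Promise<void> {
    await this.storage.setAlarm(now + INTERVAL_MS);
    await this.startChecker();
  }
}
```

Re-arming before starting the checker is a design choice in this sketch: the next tick is already scheduled even if the checker run fails.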

Next, I moved the check-fetcher function across. On AWS, this would talk to SNS topics to send checks to the right region. I needed a Cloudflare-native means of placing Workers in different regions around the world. To do this, I reached for Durable Objects, with their location hints.

So I created another new Durable Object cleverly named "placer" with a single purpose: run a Worker in the region you wake up in.
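Here is a hedged sketch of how a "placer" could be addressed with a location hint. The `PlacerNamespace` interface mirrors the `idFromName` and `get(id, { locationHint })` calls of a Durable Object namespace binding, and the hint values are the ones Cloudflare documents. The region labels, the `placer-<region>` naming scheme, and the `placerFor` helper are assumptions for illustration.

```typescript
// Location hints accepted by Durable Objects.
type LocationHint = "wnam" | "enam" | "sam" | "weur" | "eeur" | "apac" | "oc" | "afr" | "me";

// Subset of a Durable Object namespace binding, generic so the sketch has no runtime dependency.
interface PlacerNamespace<Id, Stub> {
  idFromName(name: string): Id;
  get(id: Id, options?: { locationHint?: LocationHint }): Stub;
}

// Hypothetical mapping from the region label stored on a check to a location hint.
const REGION_HINTS = new Map<string, LocationHint>([
  ["us-east", "enam"],
  ["us-west", "wnam"],
  ["eu-west", "weur"],
  ["ap-southeast", "apac"],
]);

// Get the placer object for a region, asking for it to be created near that region.
function placerFor<Id, Stub>(ns: PlacerNamespace<Id, Stub>, region: string): Stub {
  const hint = REGION_HINTS.get(region);
  if (hint === undefined) {
    throw new Error(`Unknown region: ${region}`);
  }
  // One named object per region, so every caller reaches the same instance.
  return ns.get(ns.idFromName(`placer-${region}`), { locationHint: hint });
}
```

Location hints are best-effort and only influence where an object is first created, so it is worth verifying where each placer actually ends up running.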

The Worker itself I copied over from AWS Lambda, and since it used native JavaScript, it didn't need rewriting.

Finally, I moved over the function that uploads results to the database. It too was fully compatible with Workers, but I chose to change from pg-promise's db.one/db.many syntax to Postgres.js's sql syntax because I liked it better.
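For readers who haven't seen it, Postgres.js queries are written as tagged template literals, where interpolated values are sent as query parameters instead of being concatenated into the SQL text. The sketch below shows the style with a simplified stand-in `Sql` type so it runs without the library. The table, column, and function names are hypothetical, and in a Worker the `sql` function would typically come from calling `postgres()` with the connection string exposed by a Hyperdrive binding.

```typescript
// Simplified stand-in for the tagged-template function that Postgres.js returns.
type Sql = (strings: TemplateStringsArray, ...values: unknown[]) => Promise<unknown[]>;

// Hypothetical result row; names are illustrative only.
interface ResultRow {
  checkId: string;
  status: number;
  durationMs: number;
}

// Write one check result. Each ${...} becomes a bound parameter, not spliced-in text.
function saveResult(sql: Sql, row: ResultRow): Promise<unknown[]> {
  return sql`
    insert into check_results (check_id, status, duration_ms)
    values (${row.checkId}, ${row.status}, ${row.durationMs})
  `;
}
```

With pg-promise, the same insert would instead be a query string with `$1`, `$2`, `$3` placeholders and a separate array of values.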

Rolling out the change

To start with, every check in my database has a "version" field that I use to control which runtime it should run on. This made for a nice feature flag for opting customers into the new system.

I wasn't too worried, as I had been using Workers to double-check failing uptime checks from AWS for years now, but there are always edge cases.

I started by running my personal checks on Cloudflare for a few days, before finding some early adopters willing to try it out, then moving over the free tier, followed by the paid tier of users.
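A version-based rollout like this can be sketched in a few lines. Everything here is hypothetical: the `cohort` field, the version number that means "run on Workers", and the helper names are assumptions for illustration, since the post only says that each check carries a version field.

```typescript
type Runtime = "lambda" | "workers";
type Cohort = "personal" | "early-adopter" | "free" | "paid";

// Hypothetical check record carrying the version flag and a rollout cohort.
interface FlaggedCheck {
  id: string;
  cohort: Cohort;
  version: number;
}

// Assumed convention: checks at or above this version run on Workers.
const WORKERS_VERSION = 2;

// Rollout order described in the post.
const ROLLOUT_ORDER: Cohort[] = ["personal", "early-adopter", "free", "paid"];

// Decide which runtime should execute a check.
function runtimeFor(check: FlaggedCheck): Runtime {
  return check.version >= WORKERS_VERSION ? "workers" : "lambda";
}

// Opt in every cohort up to and including `stage`, leaving the rest untouched.
function rollOutThrough(checks: FlaggedCheck[], stage: Cohort): FlaggedCheck[] {
  const enabled = new Set(ROLLOUT_ORDER.slice(0, ROLLOUT_ORDER.indexOf(stage) + 1));
  return checks.map((check) =>
    enabled.has(check.cohort) ? { ...check, version: WORKERS_VERSION } : check
  );
}
```

Because the flag lives on each check, rolling back is a matter of setting the version back, with no redeploy needed.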

It's faster, too?

While it's well documented that when you allocate less RAM to an AWS Lambda function, you also get less CPU, I didn't realize that it also meant AWS was throttling network performance.

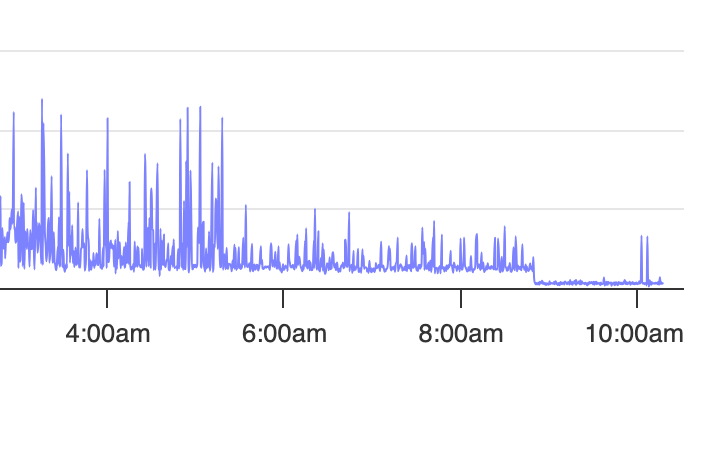

In moving OnlineOrNot's checks to run primarily from Cloudflare, I noticed response time dropped by up to 75%:

Attempting to investigate further led me to this answer on the AWS forums:

Yes, the network performance will increase with memory. There are no specifics as there is no definitive performance since Lambda compute is abstracted from you and it is also protocol and application specific. You should benchmark your function with various memory sized to determine where you meet your requirements.

Summary

To wrap it up, OnlineOrNot is now "multi-cloud": there are multiple replicas of the system ready to run (for free while no one uses it) on AWS, and a version of the system running natively on Cloudflare, where checks complete faster, with running costs of less than half of what I was paying for AWS Lambda.

As we are bootstrapped, this accelerates the timeline for hiring additional help for the business, and will improve our support and product overall in the long term.