Scaling AWS Lambda and Postgres to thousands of simultaneous uptime checks

Max Rozen / Published: September 16, 2023

When you're building a serverless web app, it can be pretty easy to forget about the database. You build a backend, send some data to a frontend, write some tests, and it'll scale to infinity with no effort, right?

Not quite.

Especially not with a tiny Postgres server. As the number of users of your frontend increases, your app will open more and more database connections until the database is unable to accept any more.

That's just the frontend - it gets worse on the backend.

If you're using AWS Lambda functions as asynchronous "job runner" functions that increase in usage as your web app becomes more popular, you're going to run into scaling issues fast.

By revisiting my app's architecture, I removed the need for each worker function to talk to my database, and greatly improved scalability without needing to pay for a bigger database.

Curious? Read on.

First, some context

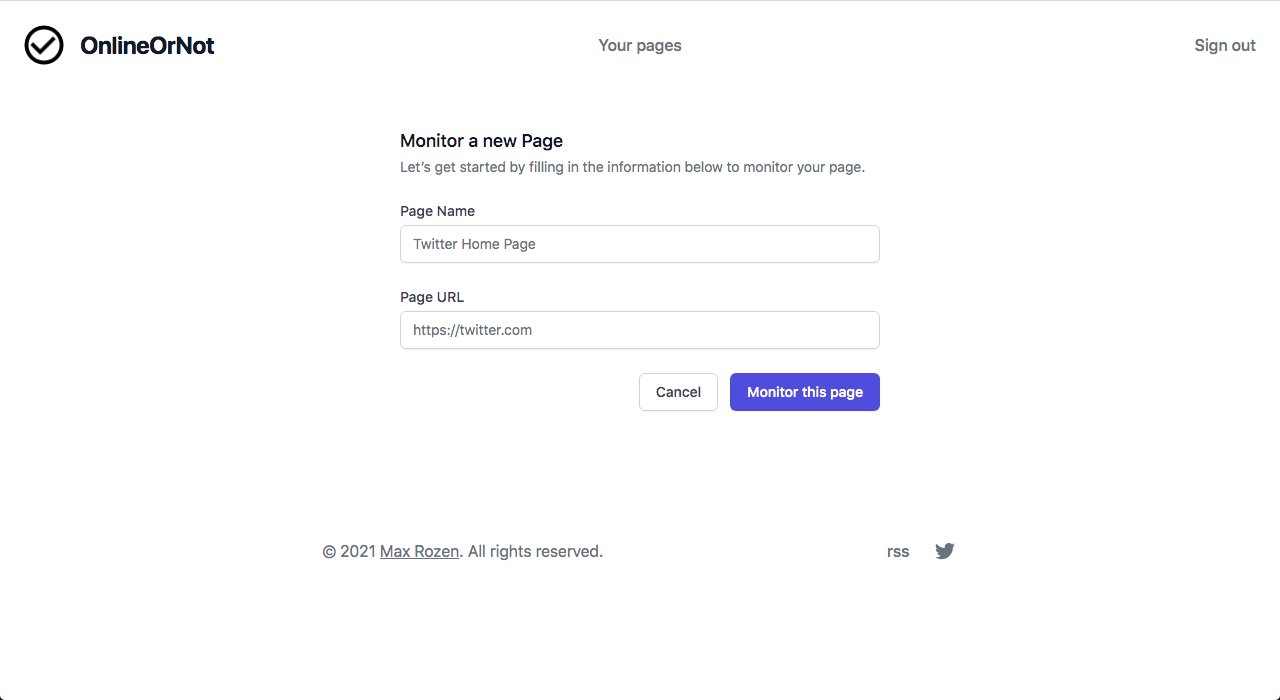

OnlineOrNot (where this article is hosted) started its life as an extremely simple uptime monitoring service. As a user you could sign in, add a URL you wanted to monitor, get alerts (only via email), and pay for a subscription.

Architecture-wise, it was pretty simple too.

It consisted of:

- a single AWS RDS database instance running Postgres

- a Lambda function that fired every minute to check the database for URLs to check

- an SQS queue

- a Lambda function that got triggered via SQS, which would check the URLs, and write the results to the database
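To make the later scaling problem concrete, here is a minimal sketch of what that last function could look like. It is not OnlineOrNot's actual code: the `check_url` and `connect` parameters, the `results` table, and the `{"url": ...}` message shape are all assumptions for illustration, and the database driver is injected so the sketch runs without a real Postgres server.

```python
import json
from typing import Callable, Protocol


class Connection(Protocol):
    """The small slice of a database connection this sketch relies on (hypothetical)."""

    def execute(self, sql: str, params: tuple) -> None: ...

    def close(self) -> None: ...


def checker_handler(
    event: dict,
    check_url: Callable[[str], int],
    connect: Callable[[], Connection],
) -> int:
    """Handle one SQS-triggered invocation: check each URL, then write its result.

    Returns the number of results written.
    """
    written = 0
    for record in event["Records"]:  # Lambda receives SQS messages under "Records"
        url = json.loads(record["body"])["url"]  # assumed message shape
        status = check_url(url)
        conn = connect()  # a fresh database connection for every result
        try:
            conn.execute(
                "INSERT INTO results (url, status) VALUES (%s, %s)",  # assumed table
                (url, status),
            )
            written += 1
        finally:
            conn.close()
    return written
```

The detail to notice is `connect()` sitting inside the handler: every check that finishes opens its own connection, so the number of connections grows with the number of URLs being monitored.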

The scalability issue

If you have a bit of experience with building on serverless, you can probably immediately see where my mistake was. As the web app grew in popularity, each URL that got added for monitoring would need its own database connection when writing results.

That might have been fine if I was developing an internal uptime checker at a small company (assuming you build for the problems you know you have now, rather than problems you might have), but I'm running OnlineOrNot as a growing bootstrapped business.

Shortly after thinking to myself "this is fine", I found myself needing to check up to 100 URLs at a time, every minute. Each time the jobs finished, all 100 checker Lambda functions would try to write to the database at (more or less) the same time, and my database would refuse to open any more connections, including from my frontend!

To buy myself time, I scaled up the database a few tiers (the more memory your RDS Postgres instance has, the more simultaneous connections it can receive), and went looking for ways to fix the issue.
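For a rough sense of how memory maps to connections: as far as I know, the default RDS for PostgreSQL parameter group sets `max_connections` to `LEAST({DBInstanceClassMemory/9531392}, 5000)`. The sketch below applies that formula to an instance's nominal memory. Treat the output as an upper bound, since `DBInstanceClassMemory` is somewhat lower than the advertised memory once the OS and RDS processes take their share. The function name and the example sizes are mine, not from the original setup.

```python
# Divisor and cap from the default RDS for PostgreSQL max_connections formula.
RDS_BYTES_PER_CONNECTION = 9_531_392
RDS_CONNECTION_CAP = 5_000


def estimate_max_connections(memory_gib: float) -> int:
    """Estimate the default max_connections for an instance with this much memory.

    Uses nominal memory, so the real limit will be a little lower.
    """
    memory_bytes = int(memory_gib * 1024**3)
    return min(memory_bytes // RDS_BYTES_PER_CONNECTION, RDS_CONNECTION_CAP)


if __name__ == "__main__":
    for gib in (1, 2, 4, 8):
        print(f"{gib} GiB -> at most ~{estimate_max_connections(gib)} connections")
```

With numbers like these, a 1 GiB instance leaves little headroom once 100 checker functions and a frontend all want connections in the same second.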

Fixing the issue

While implementing a fix for the issue, I managed to land my largest customer to that point. They wanted to monitor over a thousand URLs at a time, every minute.

I ran the numbers - if I kept upgrading database tiers every time I needed to scale, I would quickly run out of database tiers to upgrade to.

The fix in the end was to:

- Had each checker Lambda return its result via Simple Notification Service (SNS), instead of writing to the database directly

- Added a second queue that batches results from SNS

- Made a new AWS Lambda function that receives the batched results and writes them to the database
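The last step in that list can be sketched roughly as follows. This is an illustration under assumptions rather than the production code: it assumes the checker publishes a JSON payload shaped like `{"url": ..., "status": ...}`, that the SNS-to-SQS subscription uses the default (non-raw) delivery so each SQS body is an SNS envelope with a `Message` field, and that `write_rows` is a hypothetical callable that performs one multi-row insert over a single connection.

```python
import json
from typing import Callable, List, Sequence, Tuple

Row = Tuple[str, int]  # (url, status); assumed result shape


def parse_results(event: dict) -> List[Row]:
    """Extract rows from an SQS batch whose messages were delivered via SNS."""
    rows: List[Row] = []
    for record in event["Records"]:
        envelope = json.loads(record["body"])  # SNS notification envelope
        result = json.loads(envelope["Message"])  # the checker's own payload
        rows.append((result["url"], result["status"]))
    return rows


def writer_handler(event: dict, write_rows: Callable[[Sequence[Row]], None]) -> int:
    """Write a whole batch of check results in one go.

    Returns the number of rows handed to the database.
    """
    rows = parse_results(event)
    if rows:
        write_rows(rows)  # one call, one connection, for the entire batch
    return len(rows)
```

Because the write is injected, `write_rows` could wrap whichever Postgres driver you use. The point of the design is that the database call happens once per batch, not once per URL.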

The architecture ended up looking like this:

As a result, the application now scales relative to how many resultfwdr functions (the new Lambda functions that write batched results to the database) are running, rather than the individual checker Lambda functions. This pattern is also known as fan-out/fan-in architecture.
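A quick back-of-the-envelope calculation shows why fanning results back in helps. The batch size of 10 below is a hypothetical value chosen for illustration; the real figure depends on how the queue trigger is configured.

```python
import math


def writer_invocations(results: int, batch_size: int) -> int:
    """Upper bound on database-writing invocations needed for one round of checks."""
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    return math.ceil(results / batch_size)


if __name__ == "__main__":
    # Before: every checker wrote its own result, so 1000 checks meant up to
    # 1000 connections. After: with a hypothetical batch size of 10, the same
    # round needs at most 100 writes.
    print(writer_invocations(1000, 1))
    print(writer_invocations(1000, 10))
```

Larger batches push the number of writers down further, at the cost of results landing in the database slightly later.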

With this fix in place, I was able to downgrade my database instance back to where I started, with only a minor percentage increase in CPU usage.